What is rightsizing? Rightsizing is an ongoing process of adjusting the size, family, and count of resources to match real demand, so you cut spend without breaking SLOs. Done well, it is repeatable and evidence-driven, meaning teams can run it every sprint and verify impact in billing.

In HR, rightsizing usually means workforce restructuring (sometimes called workforce rightsizing). In a cloud company, rightsizing is about infrastructure capacity, so decisions are based on telemetry, ownership context, and cost impact.

You’re here to cut cloud spend without breaking SLOs, and you need a plan you can run every sprint, one that passes PR review and change control. Telemetry says p95 CPU 28%, memory flat, I/O calm, yet the bill keeps creeping:

- CUR, Azure Cost Management, and GCP Billing pile up

- The backlog dodges sizing tickets

- Tags drift

- Commitments twist the math

- Kubernetes requests sit at 2x reality

So, how do we turn right sizing into an operating motion instead of a one-off cleanup, and which signals prove a resize is safe?

Rightsizing in cloud cost optimization

Rightsizing is an evidence-driven process of adjusting cloud capacity to real demand, so a company can reduce spend without breaking SLOs or distorting unit economics. Done as a repeatable motion, it aligns cost decisions with business objectives across the company.

In practice, you change the size, family, and count of resources across compute, Kubernetes, databases, storage, and serverless, so baseline capacity matches what workloads actually need rather than what they were provisioned for months ago.

To make those changes safe, you need three inputs:

- Telemetry (p95/p99 CPU and memory, I/O, latency, errors, queue depth)

- Billing truth (AWS CUR, Azure Cost Management exports, and GCP Billing export)

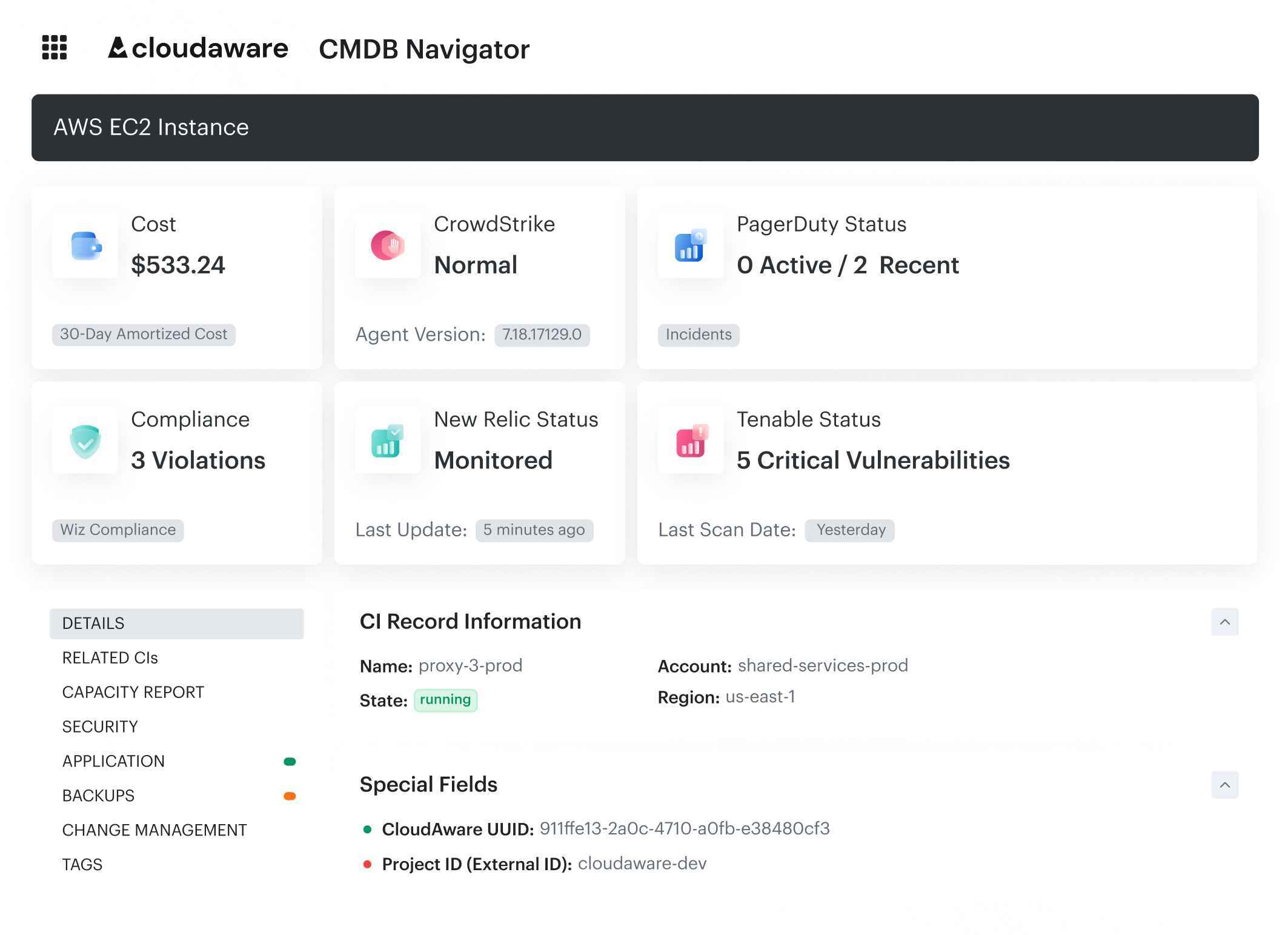

- Ownership context from CMDB and tags like application, environment, owner_email, and cost_center

Why rightsizing matters for your company

Because every week you delay, 30-40% of your compute and storage spend is leaking away. It shows up in bills you can’t explain, finance asking why commitments don’t match, and engineers ignoring yet another “please resize this VM” ticket.

The first win is measurable savings that show up where it matters: in billing views your finance team trusts, with before/after evidence tied to specific resources and owners. When a company makes rightsizing a weekly habit, it stops paying for baseline capacity that no longer matches real usage.

The second win is forecast stability, because right-sized baselines reduce surprise variance and keep cost anomalies from becoming weekly escalations. Finance gets numbers it can plan against, and engineering gets fewer “why did spend jump?” fire drills.

The third win is commitments that map to reality, since rightsizing clarifies steady demand before you lock in RI/Savings Plans/CUDs. That keeps coverage healthy and reduces the risk of discounting idle capacity.

The fourth win is governance that holds: chargeback reflects actual consumption instead of bloated allocations, and the work becomes part of sprint execution rather than a side project. As a result, the company gets cleaner accountability, fewer reversions, and a cost baseline that stays explainable as the platform evolves.

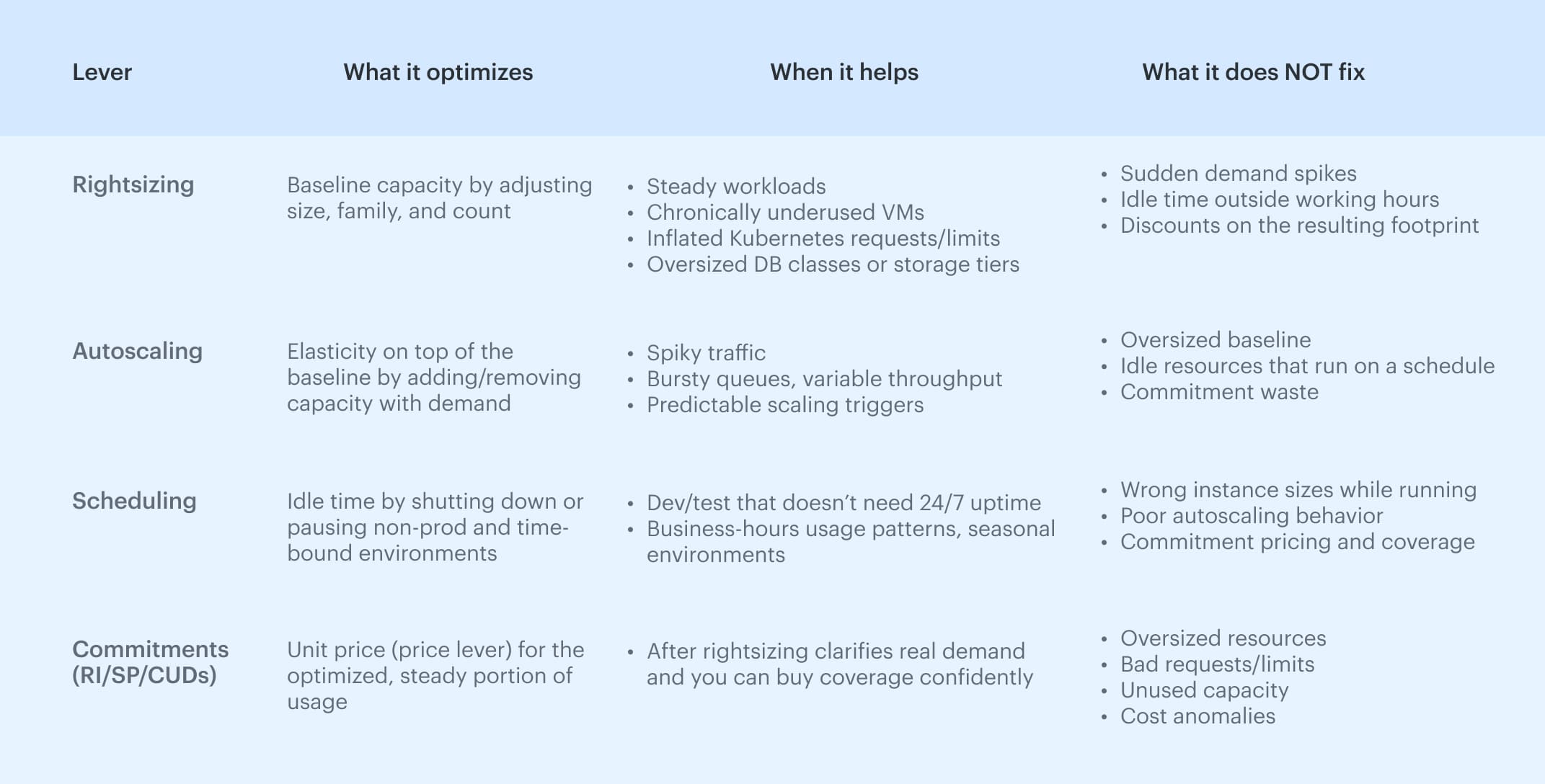

- Right-sizing tunes the baseline

- Autoscaling handles elasticity on top of that

- Scheduling shuts off what’s not needed

- Purchasing captures discounts on the optimized footprint

When these motions run in sync, you get lower $/unit, cleaner forecasts, healthier commitment coverage, and fewer cost anomalies. That's how mature FinOps teams scale without spiraling cloud bills.

Read also: 12 FinOps use cases + solutions for multi-cloud spend

Rightsizing ownership: who owns it, and when to run it

That’s rightsizing in motion, but it only holds when a multi-cloud company treats it as an operating process with clear ownership, not as a dashboard someone checks when the bill spikes.

- Service owners and SRE/platform teams own execution: they can resize EC2/Azure VM/GCE, tighten Kubernetes requests and limits, shift database classes, and move storage tiers without guessing about SLO impact.

- FinOps owns the cadence logic: surfacing candidates, modeling net savings with RI/Savings Plans/CUDs, and managing the cadence so the backlog does not quietly drop every ticket.

Run it on a predictable rhythm and around change events:

- Weekly as a standing sprint item

- Pre/post major releases and traffic spikes

- After migrations

- When autoscaling behavior shifts

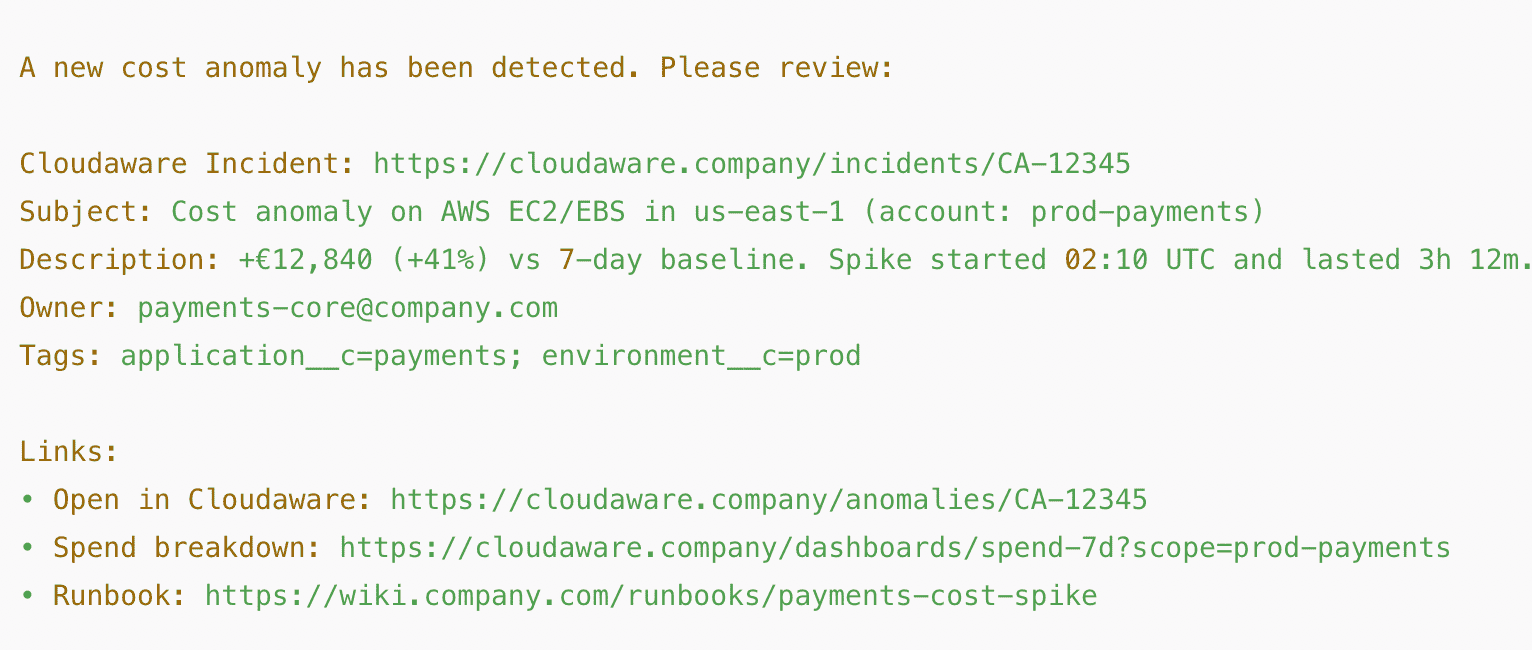

- Before commitment planning or right after cost anomaly detection alerts fire

The workflow stays simple: generate candidates, de-risk peaks and scaling rules, model net savings, open a change with graphs and rollback, ship in a window or blue/green, then verify for 7-14 days using latency, error rate, and cost deltas.

A healthy rightsizing program typically lands 15-35% savings on steady workloads, 60-80% acceptance, a 14-21 day cycle time, and ±10-15% forecast variance, which is why teams formalize it in their FinOps personas and RACI instead of treating it as optional hygiene.

Read also: Multi-Cloud Cost Optimization Assessment Playbook

Signals that prove a resize is safe

Right-sizing is not “CPU looks low, ship it.” It is an evidence-first decision where you prove that a smaller shape still meets SLOs under real load, and you treat saturation signals as first-class constraints, because the fastest way to lose trust in the program is a resize that trades savings for hidden performance risk.

For compute, the safe path is to read multiple signals together over a consistent window:

- p95/p99 CPU and memory

- Load average

- Disk throughput

- Network throughput

- Saturation signals

CPU headroom alone is not a green light if memory pressure is high, caches churn, or I/O is already near its ceiling, because that’s where latency spikes show up first.

For Kubernetes, you validate that requests and limits reflect reality and that the cluster can still absorb variance after the change, which means watching throttling, evictions, and node pressure alongside p95 usage.

If HPA is in play, you also check that scaling remains stable after tightening requests, and if VPA is involved, you make sure it is not fighting HPA in a way that turns “optimization” into constant churn.

For databases, storage, and serverless, the same rule applies: don’t resize blind to bottlenecks that express as latency. DB signals typically center on vCores, IOPS, buffer hit ratio, and query latency; storage decisions depend on IOPS vs tier and lifecycle eligibility; serverless tuning is about finding the lowest memory setting that preserves duration and cost-per-ms.

Read also: 10 Cloud Cost Optimization Benefits: Why It Matters for Your Team

Rightsizing vs autoscaling vs scheduling vs commitments

Cloud cost optimization gets messy when teams mix levers that solve different problems, because a strategic approach depends on doing the right action at the right layer: rightsizing fixes baseline capacity, autoscaling handles elasticity, scheduling cuts idle time, and commitments reduce the unit price after the footprint is correct.

When these are blurred together, business objectives like predictable forecasts and stable SLOs get replaced by ad hoc tweaks and decision-making that doesn’t compound. Run the sequence in this order: right-size first to tune the baseline, then autoscaling and scheduling to handle bursts and idle time, and only then commitment planning to lock discounts onto what your business actually uses; otherwise anomaly detection keeps firing on waste you could have prevented with a cleaner baseline

Run the sequence in this order: right-size first to tune the baseline, then autoscaling and scheduling to handle bursts and idle time, and only then commitment planning to lock discounts onto what your business actually uses; otherwise anomaly detection keeps firing on waste you could have prevented with a cleaner baseline

Read also: Cloud Cost Optimization Framework - 11 Steps to reducing spend in 2026

AWS, Azure, and GCP rightsizing tool map

Provider tooling matters because it determines two things: where your team gets credible sizing recommendations, and how you prove that a change actually reduced cost without any harm.

The names also matter for search intent, because “AWS rightsizing” and “Azure rightsizing” often map to specific native services rather than a generic process, and billing validation typically starts with the same foundation you use for AWS cloud cost management.

| Provider | Recommendations | Billing validation | Telemetry |

|---|---|---|---|

| AWS | Compute Optimizer, Trusted Advisor | AWS CUR (validate with amortized vs blended/unblended views) | CloudWatch |

| Azure | Azure Advisor | Azure Cost Management exports | Azure Monitor |

| GCP | GCP Recommender | BigQuery Billing Export | Cloud Monitoring |

Across all three, the workflow stays consistent: you use the provider recommender to generate candidates, you confirm the savings in the billing export that finance trusts, and you gate the change on p95/p99 signals from the monitoring stack your SREs already rely on.

If you’re running this across multiple accounts, subscriptions, and projects, it helps to standardize scopes and ownership first, which is exactly what teams formalize during a multi-cloud assessment (insert internal link here).

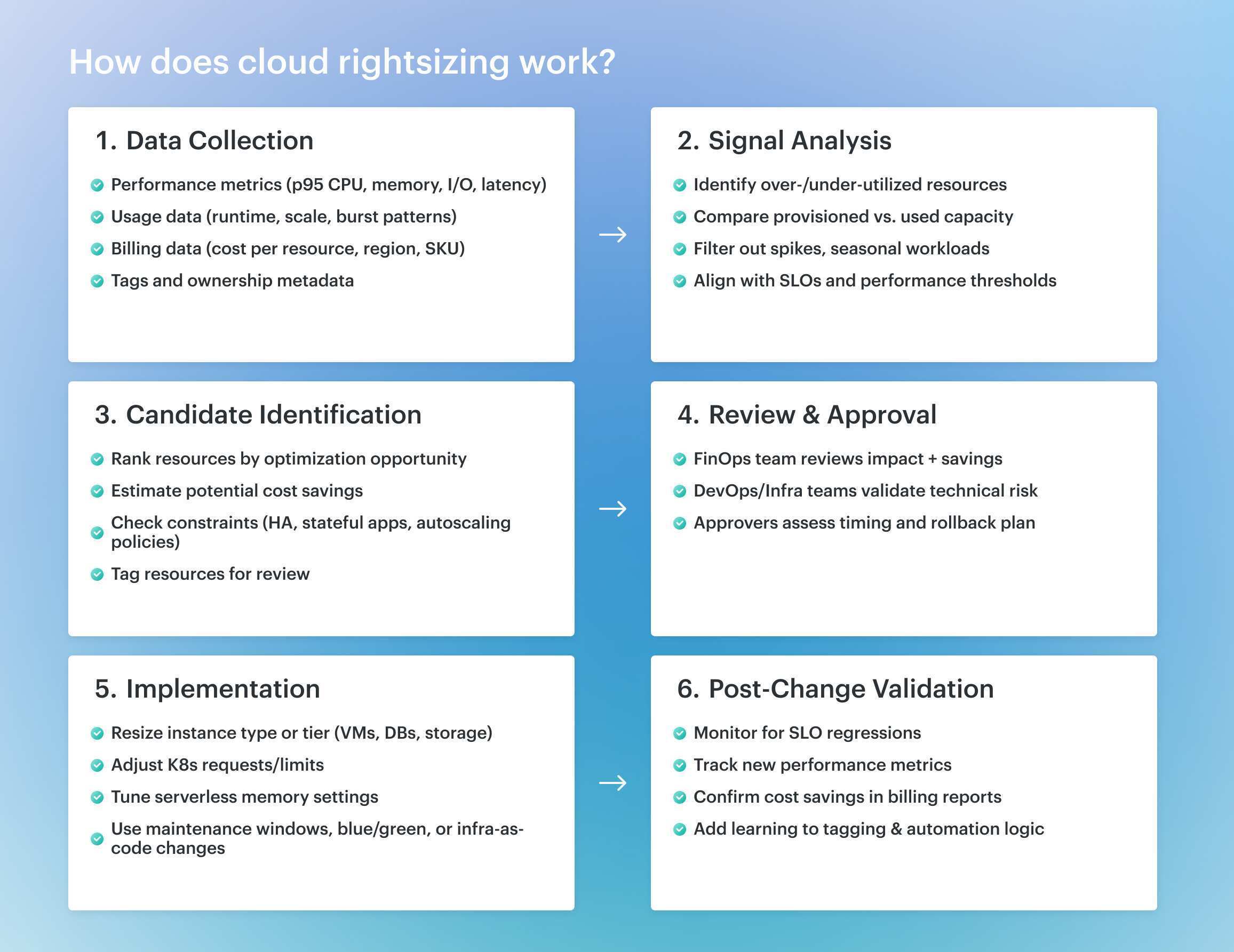

How rightsizing works

Use the diagram below as the mental model: rightsizing is a weekly cycle, not a one-time cleanup, because demand shifts between releases, autoscaling behavior changes, and commitments keep changing what “savings” even means. The goal is to run the same rightsizing process at each stage of delivery with consistent evidence, so decisions survive PR review, change control, and Finance questions.

The goal is to run the same rightsizing process at each stage of delivery with consistent evidence, so decisions survive PR review, change control, and Finance questions.

Data ingestion and enrichment

In Cloudaware’s FinOps flow, everything starts with ingestion and enrichment: billing feeds from AWS CUR, Azure Cost Management exports, and GCP Billing in BigQuery are pulled in, then correlated with live telemetry from CloudWatch, Azure Monitor, Cloud Monitoring, Prometheus for Kubernetes, and APM traces.  Those signals are mapped to real resources using CMDB records and tags like application, environment, owner_email, and cost_center, so every candidate has an accountable owner and a scope you can report on (account/subscription/project, BU/product).

Those signals are mapped to real resources using CMDB records and tags like application, environment, owner_email, and cost_center, so every candidate has an accountable owner and a scope you can report on (account/subscription/project, BU/product).

Automated signal evaluation

From there, evaluation runs continuously over a 14-30 day window rather than a single snapshot, so spikes and seasonal bursts don’t dominate the recommendation. The system scores confidence based on whether p95/p99 CPU and memory, I/O, and SLO-adjacent signals look stable enough to resize safely, which is what keeps the workflow credible for production resources.

Read also: FinOps vs DevOps - How to Make Them Work Together

Candidate generation and ranking

Next comes ranking: candidates are prioritized by projected net savings, already adjusted for RI, Savings Plans, or CUDs, and annotated with risk notes like autoscaling group dependencies or cluster constraints.

The output is not just “this looks oversized,” but “this is worth changing under your current contracts, with known caveats,” which keeps engineering focused on the highest-leverage items.

Read also: FinOps Maturity Model - 7 Expert Moves You Can Steal

Output and team handoff

Finally, the handoff is operational, not aspirational: service owners receive Jira/ADO/ServiceNow tickets with before/after graphs, the target shape, and a rollback plan, plus a weekly digest in Slack or Teams, and nothing auto-remediates without review.  In other words, the loop is Ingest → Evaluate → Rank → Route, and the work compounds over time when teams apply FinOps principles consistently across cost, ownership, and change execution.

In other words, the loop is Ingest → Evaluate → Rank → Route, and the work compounds over time when teams apply FinOps principles consistently across cost, ownership, and change execution.

Implementing a rightsizing program

Most teams fail at proving impact, because commitments change what “savings” means on the bill. For a company running Savings Plans, RIs, or CUDs, a resize can be the right technical move and still show almost no immediate reduction in spend, since you may simply be freeing committed capacity rather than cutting on-demand usage.

That is why costs rightsizing needs a consistent process: every ticket should state whether the change reduces on-demand spend now or reallocates capacity under an existing commitment, and it should reference the view used for validation (amortized versus blended/unblended) so Finance and engineering are looking at the same truth.

One-line rule to keep it honest: immediate_savings = min(reduction_amount, on_demand_above_commitment); freed_commitment = reduction_amount - immediate_savings

The sprint-ready rightsizing plan

- Step 1. Wire billing exports (AWS CUR, Azure Cost Management exports, GCP BigQuery Billing Export) and keep scopes consistent across account, subscription, and project

- Step 2. Sync telemetry from CloudWatch, Azure Monitor, Cloud Monitoring, Prometheus, and APM so latency and error rate travel with the cost story

- Step 3. Establish ownership in CMDB and tags (application, environment, owner_email, cost_center) and backfill gaps with virtual tagging from OUs, subscriptions, and projects

- Step 4. Set guardrails (14-30 day window, exclude peak weeks and stateful control planes, confidence threshold such as ≥90% for prod)

- Step 5. Plug recommendations into Jira/ADO/ServiceNow and a weekly Slack or Teams digest so the work lands in the sprint, not in someone’s inbox

- Step 6. Review candidates weekly by scope and sort by net savings already adjusted for RI/Savings Plans/CUDs

- Step 7. Create a change artifact that an approver can understand in one line and attach before-change graphs plus a rollback

- Step 8: ship safely via a maintenance window or blue/green, and apply Kubernetes request and limit changes through IaC with gradual rollout

- Step 9. Verify for 7-14 days using latency, errors, throttling, queue depth, and the billing delta

- Step 10. Feed the new baseline back into forecasting and commitment planning, then summarize delivered savings, acceptance rate, and cycle time so the program keeps trust

Benchmarks and KPIs

This motion stays funded when it behaves like an operating motion, meaning it produces predictable throughput and evidence, not sporadic “optimization weeks.” That’s why KPIs exist here: they protect cadence, so the company can compare outcomes across teams and remove debates.

- 15-35% savings on steady workloads,

- 60-80% acceptance,

- ≤14-21 day cycle time,

- ±10-15% forecast variance.

5 common rightsizing anti-patterns to avoid

Mistake 1. Chasing CPU charts and ignoring memory, I/O, and latency

Mikhail Malamud, Cloudaware GM:

“CPU at 25% looks safe until p99 memory sits at 82% and the cache is thrashing. I don’t green-light a downsize unless p95/p99 CPU and memory are in range, disk and network aren’t near saturation, and APM shows stable p95 latency with flat queue depth. Attach those graphs to the ticket and you’ll avoid rollback Fridays.”

Mistake 2. Quoting “gross” savings that never hit the bill

Anna, ITAM expert:

“That m6i.4xlarge → m6i.2xlarge recommendation might look like $320/month in savings. But when 85% of that workload is already covered by a Savings Plan, the actual impact is more like $48.

Before you move anything, check the commitment coverage for that scope — account, region, service. If you're under the RI/SP/CUD line, that savings won’t show up on the bill today. What you’re doing is freeing up committed capacity, which is great — but only if someone else can use it.

Otherwise, you’ll end up with clean infra, a bloated commitment, and a finance team that still doesn’t see the win.”

Mistake 3. Treating rightsizing as a dashboard

Daria, our ITAM who lives in FinOps dashboards:

“Recommendations don’t ship themselves. Open a Jira/ADO ticket with owner_email, scope, pre-change graphs, target shape, and rollback. Set team KPIs: ≥60% acceptance and a 14-21 day cycle time. Run a weekly triage, post the wins in Slack, and watch the backlog actually move.”

Mistake 4. Downsizing stateful or control-plane services in risky windows

Mikhail Malamud, Cloudaware GM:

“Rightsizing a message broker, DB primary, or cluster control plane without prep is asking for a 2 a.m. incident. These services aren’t stateless. They don’t recover gracefully when their IOPS or memory gets squeezed mid-peak.

In our flow, we flag these as ‘high-risk’ and route them through a different path.Here’s the checklist we follow:

- Scope the change in a maintenance window — never during rollout or scale-up periods.

- Use blue/green or test on a single node first (canary).

- Attach pre-change SLO metrics (latency, error rate, throttle events).

- Run a 7-14 day verification after the resize before closing the ticket.”

Mistake 5. Kubernetes requests/limits set 2-3x reality

Anna, ITAM expert:

“Letting Kubernetes requests and limits drift with no ownership, because what starts as a safe buffer becomes 2-3x over-provisioning and silently burns node-hours. The fix is to baseline requests from 14-30 days of p95 usage, keep limits with modest headroom, validate throttling and evictions after change, and make namespace ownership explicit so the work repeats instead of regressing.”