You’re here to get control over cloud costs — and not just “see” the numbers. You want to understand them. What caused that spend spike? Are your RI/SPs actually saving money? Why does Finance still ask for CSVs when you have dashboards?

Here’s the kicker: cloud cost analysis isn’t hard because the data’s hidden. It’s hard because the data is messy — across AWS CURs, Azure EA exports, GCP BigQuery billing, tags that don’t align, and Kubernetes clusters with no unit metrics.

We built this guide from the trenches. Real FinOps managers, DevOps leads, and cloud architects at Cloudaware shared what works — folks supporting companies like Boeing, Coca-Cola, and NASA. We folded in insights from over 10 years of platform experience, client patterns, and public benchmarks.

Here’s what you’ll walk through:

- How do you set up AWS/Azure/GCP exports for accurate cloud cost analysis?

- How do you scope spend using tags, labels, and CMDB data?

- What’s a reliable cloud spend analytics dashboard layout?

- How do you go from spike to root cause in 5 clicks?

- What policies actually detect waste worth fixing?

- How do commitments change your effective cost?

- How do you forecast with confidence — and catch variance early?

- What metrics help prove cloud cost optimization without spreadsheets?

- How do you bake guardrails into delivery, not just audits?

Let’s get into step #1 👇

Connect AWS/Azure/GCP billing exports for cloud cost analysis

Before you can do any meaningful cloud cost analysis, the billing data has to be clean, complete, and scoped correctly. And I don’t mean a good-looking dashboard — I mean the actual billing feeds underneath. If they're broken, late, or incomplete, your cloud spend analytics will be wrong from day one — and yes, Finance will notice.

The good news? In Cloudaware, you don’t have to build ingestion pipelines or manage export schedules. The platform integrates directly with AWS, Azure, Oracle, Alibaba, and GCP, and pulls in your billing data automatically every day.

Here’s what that connection includes (Cloudaware handles all of this behind the scenes):

✅ AWS

- Cost and Usage Report (CUR) with hourly granularity.

- Resource IDs enabled.

- Amortized, blended, and unblended cost columns.

- Output in Parquet format for downstream analytics.

- Ingested via read-only cross-account role (no manual ETL).

Without amortized data, you can’t see RI/SP costs accurately. Without resource IDs, cost allocation and filtering are incomplete.
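To make the amortized-vs-unblended distinction concrete, here is a minimal Python sketch that derives an amortized cost for each CUR line item. It assumes the legacy CUR column names you get when querying the Parquet output through Athena (for example `line_item_line_item_type` and `reservation_effective_cost`). It loosely follows the amortized-cost pattern in AWS's published CUR query examples. It is a simplification, not Cloudaware's implementation, and it skips edge cases such as credits and refunds.

```python
# Minimal sketch: amortized cost per AWS CUR line item.
# Assumes legacy CUR column names (Parquet/Athena style); credits, refunds,
# and other edge cases are intentionally ignored.

def amortized_cost(row: dict) -> float:
    """Return the amortized cost of one CUR line item (a dict of column -> value)."""
    item_type = row.get("line_item_line_item_type", "")

    if item_type == "SavingsPlanCoveredUsage":
        # Usage covered by a Savings Plan: use the SP effective cost.
        return row.get("savings_plan_savings_plan_effective_cost", 0.0)
    if item_type == "SavingsPlanRecurringFee":
        # Only the unused part of the SP commitment remains as extra cost.
        return (row.get("savings_plan_total_commitment_to_date", 0.0)
                - row.get("savings_plan_used_commitment", 0.0))
    if item_type in ("SavingsPlanNegation", "SavingsPlanUpfrontFee"):
        # Negation rows offset on-demand charges, and upfront fees are amortized
        # into effective cost, so both count as zero here.
        return 0.0
    if item_type == "DiscountedUsage":
        # Usage covered by a Reserved Instance: use the RI effective cost.
        return row.get("reservation_effective_cost", 0.0)
    if item_type == "RIFee":
        # Only the unused portion of the RI (upfront share + recurring) remains.
        return (row.get("reservation_unused_amortized_upfront_fee_for_billing_period", 0.0)
                + row.get("reservation_unused_recurring_fee", 0.0))
    if item_type == "Fee" and row.get("reservation_reservation_a_r_n"):
        # One-time RI purchase fee: already spread across DiscountedUsage rows.
        return 0.0
    # Everything else (plain on-demand usage, taxes, etc.) is taken as billed.
    return row.get("line_item_unblended_cost", 0.0)


# Hypothetical sample rows for illustration.
rows = [
    {"line_item_line_item_type": "Usage", "line_item_unblended_cost": 10.0},
    {"line_item_line_item_type": "DiscountedUsage",
     "line_item_unblended_cost": 0.0, "reservation_effective_cost": 6.5},
    {"line_item_line_item_type": "Fee", "line_item_unblended_cost": 1000.0,
     "reservation_reservation_a_r_n": "arn:aws:ec2:us-east-1:123456789012:reserved-instances/example"},
]
total_amortized = sum(amortized_cost(r) for r in rows)
```

Summing `line_item_unblended_cost` for the same rows would show the $1,000 upfront fee as a one-day spike. The amortized view zeroes that fee and spreads it across the `DiscountedUsage` rows over the term.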

✅ Azure

- Cost Management + Billing Export to a Storage Account.

- Export type: Actual Cost, frequency: Daily.

- Permissions: Cost Management Reader + Reader roles at subscription scope.

- Cloudaware validates export structure — catching EA discount gaps or flattened pricing.

✅ GCP

- BigQuery Billing Export, set to daily, partitioned.

- Scoped by billing account (not just project).

- Full line-item granularity.

- Optional: add `instance-name` labels to VMs for instance-level cost attribution.

Once connected, Cloudaware pulls billing + usage data automatically, updates it daily, and normalizes it across clouds. No manual syncs. No missed usage types. And all three providers show up in one cost model — scoped by service, tag, account, or team.
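Here is a rough sketch of what "normalizes it across clouds" means in practice. Each provider's export row is mapped onto one shared record shape, so filters like provider, account, region, and service work the same everywhere. The column names in `FIELD_MAP` are illustrative assumptions. The AWS names follow the legacy CUR Parquet convention. The Azure and GCP names should be checked against your export schema version, and GCP's nested BigQuery fields are assumed to be flattened first. `CostRecord`, `FIELD_MAP`, and `normalize` are hypothetical names, not Cloudaware APIs.

```python
from dataclasses import dataclass, field

@dataclass
class CostRecord:
    """One normalized cost line, independent of the source cloud."""
    provider: str
    account: str   # AWS account ID, Azure subscription ID, or GCP project ID
    region: str
    service: str
    cost: float
    tags: dict = field(default_factory=dict)

# Illustrative per-provider column names (assumptions; verify against your exports).
FIELD_MAP = {
    "aws": {"account": "line_item_usage_account_id", "region": "product_region",
            "service": "line_item_product_code", "cost": "line_item_unblended_cost"},
    "azure": {"account": "SubscriptionId", "region": "ResourceLocation",
              "service": "MeterCategory", "cost": "CostInBillingCurrency"},
    "gcp": {"account": "project_id", "region": "location_region",
            "service": "service_description", "cost": "cost"},
}

def normalize(provider: str, row: dict) -> CostRecord:
    """Map a raw export row onto the shared CostRecord shape."""
    cols = FIELD_MAP[provider]
    return CostRecord(
        provider=provider,
        account=str(row[cols["account"]]),
        # Some line items (e.g. global services) carry no region.
        region=row.get(cols["region"]) or "global",
        service=str(row[cols["service"]]),
        cost=float(row[cols["cost"]]),
        # Assumes tags/labels were already parsed into a dict under "tags".
        tags=dict(row.get("tags", {})),
    )

records = [
    normalize("aws", {"line_item_usage_account_id": "123456789012", "product_region": "us-east-1",
                      "line_item_product_code": "AmazonEC2", "line_item_unblended_cost": 12.5}),
    normalize("azure", {"SubscriptionId": "sub-001", "ResourceLocation": "westeurope",
                        "MeterCategory": "Virtual Machines", "CostInBillingCurrency": "7.5"}),
    normalize("gcp", {"project_id": "payments-prod", "location_region": None,
                      "service_description": "BigQuery", "cost": 3.0}),
]
```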

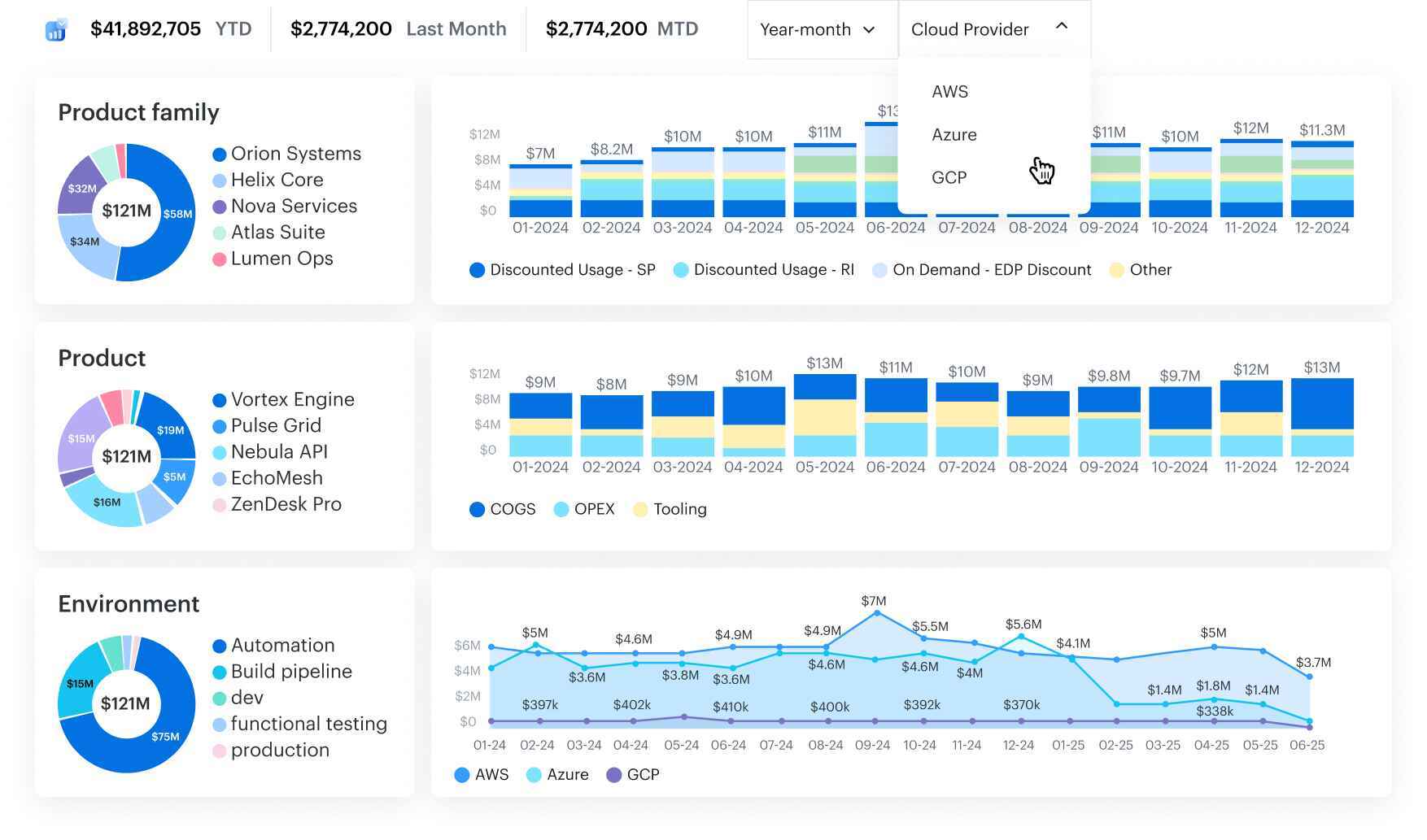

Here is an example of a multi-cloud FinOps dashboard in Cloudaware:

Any questions on how it works? Ask our experts.

That’s the foundation. You’re not just looking at numbers — you’re ready to trust the data. And now it’s time to make that spend mean something 👇

Read also: 7 Best Cost Allocation Software 2025: Tools, Features & Pricing

Define ownership with tags and CMDB Scopes for accurate cost allocation

Now that your billing exports are connected, let’s talk about ownership — because that’s where most cloud spend analysis breaks down.

Usually, when tags are missing, inconsistent, or just plain wrong, your cost data turns into noise. The finance team sees “Other,” engineering sees random spikes, and you end up mediating budget blame instead of fixing the root cause.

Start by writing a tagging strategy that matches how your org is structured. At a minimum, define:

- `application__c`: the service or workload name.

- `environment__c`: prod, dev, staging, etc.

- `owner_email__c`: the actual point of contact.

Cloudaware enforces these keys across clouds — and when they’re missing, it fills the gaps using metadata from the CMDB, resource configs, and even project/account naming patterns. You’ll still see complete cost allocation per team or service — even when native data is a mess.
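The fallback order described above works like this: native tags first, then CMDB metadata, then naming patterns. The sketch below illustrates that idea. It is not Cloudaware's actual enrichment logic. The `cmdb` dictionary, the `<app>-<env>` account naming convention in `NAME_PATTERN`, and `resolve_ownership` are hypothetical stand-ins.

```python
import re

REQUIRED_KEYS = ("application__c", "environment__c", "owner_email__c")

# Hypothetical naming convention: account/project names look like "<app>-<env>".
NAME_PATTERN = re.compile(r"^(?P<application__c>[a-z0-9]+)-(?P<environment__c>prod|staging|dev)$")

def resolve_ownership(resource_id: str, tags: dict, account_name: str, cmdb: dict):
    """Fill required ownership keys from tags, then CMDB, then naming patterns.

    Returns (values, sources), where sources records where each value came from.
    """
    values, sources = {}, {}

    # 1. Native tags win when present and non-empty.
    for key in REQUIRED_KEYS:
        if tags.get(key):
            values[key], sources[key] = tags[key], "tag"

    # 2. CMDB attributes for this resource fill remaining gaps.
    for key, value in cmdb.get(resource_id, {}).items():
        if key in REQUIRED_KEYS and key not in values:
            values[key], sources[key] = value, "cmdb"

    # 3. Account/project naming patterns are the last resort.
    match = NAME_PATTERN.match(account_name)
    if match:
        for key, value in match.groupdict().items():
            if key not in values:
                values[key], sources[key] = value, "naming"

    return values, sources

# Hypothetical CMDB snapshot: resource ID -> known attributes.
cmdb = {"i-0abc": {"owner_email__c": "checkout-team@acme.com"}}
values, sources = resolve_ownership("i-0abc", {"environment__c": "prod"}, "checkout-prod", cmdb)
```

Keeping the `sources` map is useful later. It lets you report how much of your allocation rests on inferred values rather than native tags.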


And here’s where it gets more powerful: Cloudaware doesn’t just enrich tags inside its own system. It pushes them back into your cloud. So when you open AWS Cost Explorer or Azure Cost Analysis, you still see clean, consistent data. That means Engineering, Finance, and Product are all looking at the same mapped spend — no reconciliation needed.

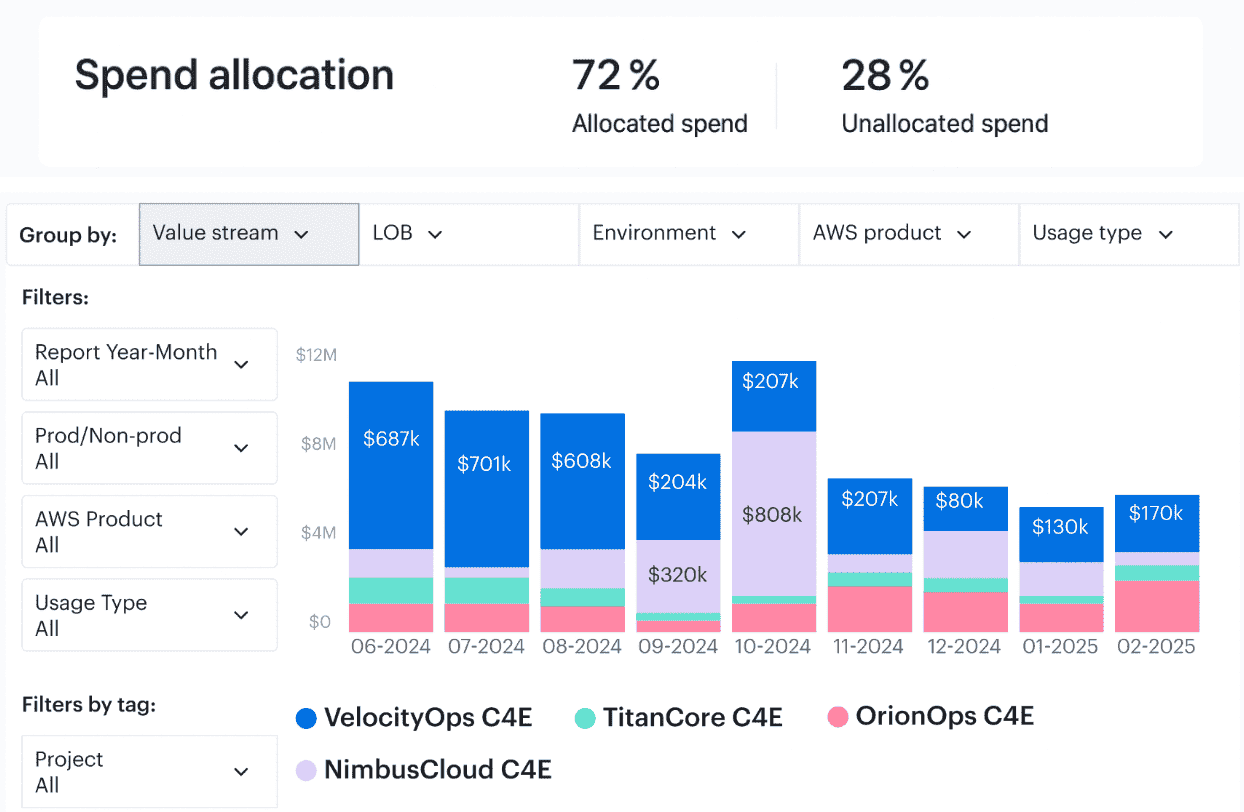

This unified ownership view forms the basis of Scopes. Scopes can be anything:

- All production workloads in EMEA.

- Every resource tagged `bu=marketing`.

- Or every GCP service under `payments-team@acme.com`.

Scopes let you slice cost by business unit, team, environment, or product line — across AWS, Azure, and GCP — without rerunning exports or juggling filters.
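One simple way to picture a Scope is as a named predicate over normalized cost records. Cost per Scope is then just a filtered sum. The record fields, the `EMEA_REGIONS` subset, and the Scope names below are hypothetical and mirror the examples above. Cloudaware's own Scopes are configured in the platform, not in code like this.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Record = dict                        # e.g. {"provider", "region", "cost", "tags"}
Scope = Callable[[Record], bool]     # a Scope is just a filter over records

EMEA_REGIONS = {"eu-west-1", "eu-central-1", "westeurope", "europe-west1"}  # illustrative subset

SCOPES: Dict[str, Scope] = {
    "prod-emea": lambda r: (r["tags"].get("environment__c") == "prod"
                            and r["region"] in EMEA_REGIONS),
    "bu-marketing": lambda r: r["tags"].get("bu") == "marketing",
    "payments-gcp": lambda r: (r["provider"] == "gcp"
                               and r["tags"].get("owner_email__c") == "payments-team@acme.com"),
}

def cost_by_scope(records: List[Record], scopes: Dict[str, Scope]) -> Dict[str, float]:
    """Sum cost per Scope. A record can match several Scopes, so totals may overlap."""
    totals: Dict[str, float] = defaultdict(float)
    for record in records:
        for name, matches in scopes.items():
            if matches(record):
                totals[name] += record["cost"]
    return dict(totals)

records = [
    {"provider": "aws", "region": "eu-west-1", "cost": 40.0,
     "tags": {"environment__c": "prod", "bu": "marketing"}},
    {"provider": "azure", "region": "westeurope", "cost": 25.0,
     "tags": {"environment__c": "dev", "bu": "marketing"}},
    {"provider": "gcp", "region": "us-central1", "cost": 15.0,
     "tags": {"owner_email__c": "payments-team@acme.com"}},
]
totals = cost_by_scope(records, SCOPES)
```

Scopes can overlap. The first record here counts toward both `prod-emea` and `bu-marketing`. For that reason, chargeback usually needs one primary Scope per record, while showback can tolerate the overlap.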

That’s what makes chargeback/showback feasible. And it's the foundation for every cost conversation you’re about to have.

Read also: FinOps Personas - Roles, RACI, and KPIs for Real Teams

Now let’s build a cross-cloud cost dashboard using these Scopes 👇

Build a cross-cloud cost dashboard with filters, trends, and unit economics

Most “cloud dashboards” fall apart the second someone asks, “Which team owns this spike?” or “Why is our unit cost up 14%?”

This is where cloud spend analytics needs to start doing real work — not just visualizing totals, but telling you what changed, where, and who needs to care.

You’re pulling in billing data from AWS, Azure, and GCP. That means you need a cross-cloud dashboard that normalizes cost details, aligns with your Scopes, and filters cleanly by:

- Cloud provider

- Account / subscription ID

- Region

- Scope (based on tags, labels, or CMDB relationships)

- Service (EC2, S3, Azure SQL, GCP BigQuery, etc.)

- Environment (prod/dev/staging)

Examples of the Cloudaware FinOps dashboards with CMDB-enriched data:


Cloudaware auto-tags and pre-scopes all of this, so you’re not creating filters from scratch or slicing up exports.

Start with tiles that surface key trends:

- MTD spend vs. previous month.

- Week-over-week % change by Scope.

- Top 10 Scopes / Services by spend.

- Forecast vs. actual.

- Unit economics: $ / request, $ / instance hour, $ / vCPU-hour, $ / GB stored.

Every data point is linked back to the actual resource. So when something spikes, you can click straight into the Scope, service, or region — no pivot tables required.
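The trend tiles above boil down to a few small calculations. This sketch shows one way to compute three of them:

- MTD vs. previous month, compared over the same number of elapsed days.
- Week-over-week % change.
- A unit cost.

It assumes daily cost totals keyed by date for a single Scope. The function names are hypothetical.

```python
from datetime import date, timedelta
from typing import Dict, Optional

def pct_change(current: float, previous: float) -> Optional[float]:
    """Percentage change; None when there is no baseline to compare against."""
    if previous == 0:
        return None
    return (current - previous) / previous * 100

def mtd_vs_previous_month(daily: Dict[date, float], as_of: date) -> Optional[float]:
    """Compare month-to-date spend with the same number of days last month."""
    start = as_of.replace(day=1)
    elapsed = (as_of - start).days + 1
    prev_end = start - timedelta(days=1)
    prev_start = prev_end.replace(day=1)
    mtd = sum(daily.get(start + timedelta(days=i), 0.0) for i in range(elapsed))
    # Cap at the previous month's length (e.g. March 31 vs. February 28).
    prev = sum(daily.get(prev_start + timedelta(days=i), 0.0)
               for i in range(min(elapsed, prev_end.day)))
    return pct_change(mtd, prev)

def week_over_week(daily: Dict[date, float], as_of: date) -> Optional[float]:
    """Last 7 days (ending as_of) vs. the 7 days before that."""
    this_week = sum(daily.get(as_of - timedelta(days=i), 0.0) for i in range(7))
    last_week = sum(daily.get(as_of - timedelta(days=i), 0.0) for i in range(7, 14))
    return pct_change(this_week, last_week)

def unit_cost(cost: float, units: float) -> Optional[float]:
    """Cost per unit, e.g. $ / request or $ / vCPU-hour."""
    return cost / units if units else None

# Hypothetical data: $100/day this week vs. $80/day the week before.
as_of = date(2025, 3, 14)
daily = {as_of - timedelta(days=i): (100.0 if i < 7 else 80.0) for i in range(14)}
wow = week_over_week(daily, as_of)
```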

Trend analysis becomes way more actionable when it’s tied to real events:

- Deployment timelines.

- RI/SP purchases.

- Infrastructure changes.

- Budget gates or policy exceptions.

Cloudaware supports all of that: trends over 30/60/90 days, custom annotations, and scheduled exports for Finance, SRE, or product teams.

This dashboard becomes your cost narrative. It helps you analyze costs in context, spot regressions, and explain impact before Finance has to ask.

And when that spike does land? You’ll want to act fast. Let’s walk through how to go from anomaly to root cause in five clicks.

Read also: 6 FinOps Domains - The Essential Map For Cloud Spend Control

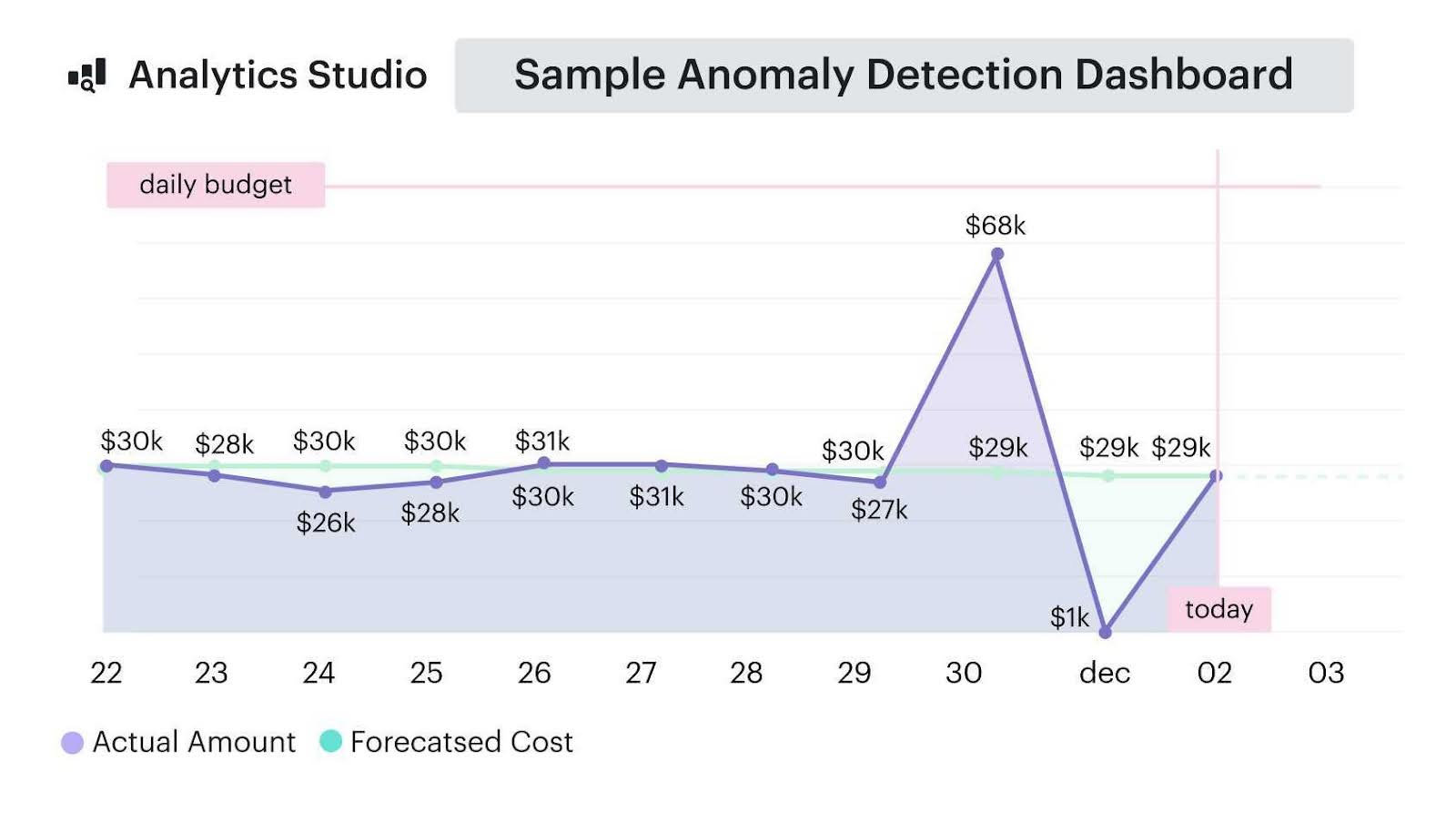

Investigate daily spend spikes

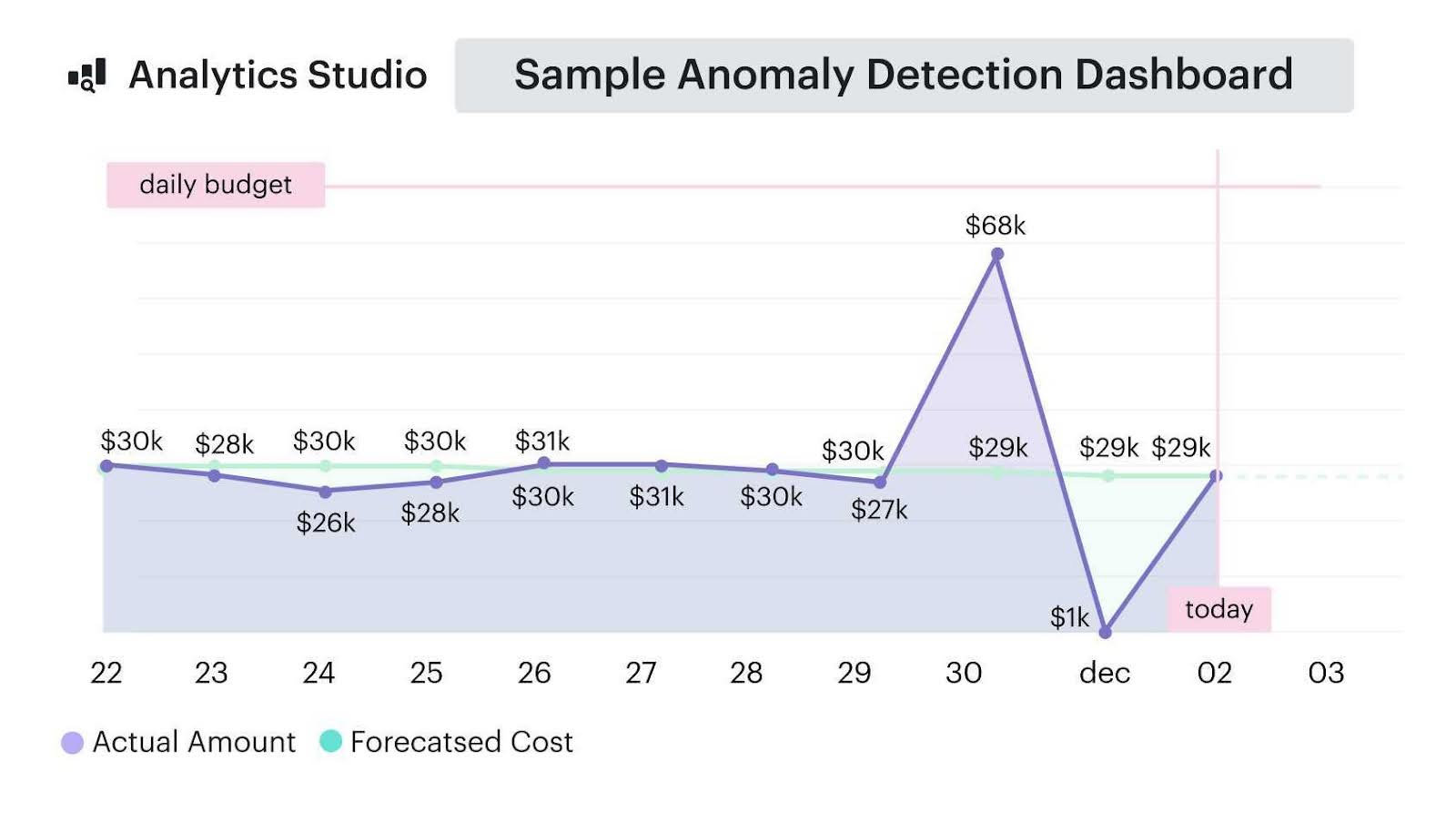

If you’ve ever been blindsided by a “Why is our spend up 12%?” email from Finance, you already know: cloud cost analysis needs to surface anomalies before they hit your CFO’s radar.

Daily deltas and cost spike analysis aren’t optional — they’re the signal layer FinOps experts like Mike Fuller and J.R. Storment call out as foundational in anomaly detection. In fact, FinOps maturity benchmarks say your MTTA (mean time to acknowledge) should be under 2 hours at the Walk stage.

So here’s how to build that into your workflow:

Cloudaware runs anomaly detection automatically across your cloud cost data — daily, per Scope, service, and environment. That means the moment Azure consumption in prod-eu jumps 18%, or GCP BigQuery usage doubles overnight, it gets flagged with context. Not just “it changed,” but where, what, and who.

Here’s the actual 5-click investigation workflow:

- Open Daily Changes: Filter by ≥10–15% variance.

- Zoom into Scope: These are tag- or CMDB-backed groupings (e.g., `app=checkout`, `team=infra`).

- Drill by service and region: EBS in `us-east-1`? Data Factory in `westeurope`?

- Spot the offender: Cloudaware shows the exact resource, SKU, and usage delta.

- Click to route: Create a Jira ticket or send a Slack alert with full context.

Every anomaly gets a confidence score and probable cause tag: usage pattern, commitment mismatch, pricing change, or provisioning drift. So you're not chasing false positives or wasting cycles on data noise.
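You can approximate the first and fourth clicks of that workflow (filter by variance, then find the offender) with a few lines over daily cost deltas. This is a plain threshold filter, not Cloudaware's anomaly model with confidence scores. The `min_abs_delta` dollar floor is an added assumption. It stops tiny resources with large percentage swings from drowning out real spikes.

```python
from typing import List, NamedTuple, Optional

class DailyChange(NamedTuple):
    scope: str
    service: str
    region: str
    resource_id: str
    yesterday: float
    today: float

    @property
    def delta(self) -> float:
        return self.today - self.yesterday

    @property
    def pct(self) -> Optional[float]:
        """Day-over-day % change; None for brand-new spend with no baseline."""
        if self.yesterday == 0:
            return None
        return self.delta / self.yesterday * 100

def flag_changes(changes: List[DailyChange], threshold_pct: float = 10.0,
                 min_abs_delta: float = 50.0) -> List[DailyChange]:
    """Return changes over the % threshold and $ floor, biggest dollar delta first."""
    flagged = []
    for change in changes:
        pct = change.pct
        over_pct = pct is None or abs(pct) >= threshold_pct  # new spend always qualifies
        if over_pct and abs(change.delta) >= min_abs_delta:
            flagged.append(change)
    return sorted(flagged, key=lambda c: abs(c.delta), reverse=True)

changes = [
    DailyChange("app=checkout", "AmazonEBS", "us-east-1", "vol-0aaa", 100.0, 200.0),
    DailyChange("app=checkout", "AmazonEC2", "us-east-1", "i-0bbb", 1000.0, 1050.0),
    DailyChange("team=infra", "Data Factory", "westeurope", "adf-01", 1.0, 3.0),
]
flagged = flag_changes(changes)
top_offender = flagged[0] if flagged else None
```

Here `threshold_pct=10.0` matches the low end of the ≥10–15% filter in step one. The EC2 change (+5%) and the $2 Data Factory blip both drop out.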

An example of the Anomaly Detections dashboard element from Cloudaware:

Anomaly detection report element.

This isn’t just nice to have. This is how you hit KPIs that actually move the needle:

- Budget breach detection within 24 hours.

- <2 hour MTTA.

- Variance < ±15% on forecast.

- Cost optimization tickets generated within one sprint.

And more often than not? That spike is the tip of a longer-term issue: idle infrastructure, overprovisioned instances, forgotten storage.

Next, we’ll show you how to turn those signals into savings 👇

Detect waste and quantify savings with policy-driven recommendations

The fastest cloud cost savings usually come from waste elimination before pricing strategy — Mike Fuller calls this “*removing gravity before adding wings.*” This is where policy-driven cost analysis tools matter — because idle resources aren’t loud.

They don’t spike. They just bleed money quietly.

Cloudaware runs a continuous policy library scan against your usage and billing data across AWS, Azure, Oracle, Alibaba, and GCP. That includes patterns like:

- Unattached EBS volumes older than 7 days

- Idle Azure VMs with <5% p95 CPU over 14 days

- Underutilized Cloud SQL or RDS instances

- Overprovisioned K8s requests vs. actual usage

- Orphaned elastic IPs, snapshots, or load balancers

Each recommendation comes with:

- Net savings estimate (amortized, not just list price)

- Confidence rating (based on historical p95 usage)

- Risk flag (e.g., stateful workloads, downstream dependencies)
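Two of the policies listed above can be expressed as small rules: unattached volumes older than 7 days, and idle VMs under 5% p95 CPU over 14 days. The sketch below shows both. Several pieces are assumptions for illustration: the resource dictionaries, the `monthly_amortized_cost` field used as the savings estimate, and the nearest-rank p95 method. Real policies would also attach the confidence and risk flags described above.

```python
import math
from datetime import datetime, timedelta, timezone
from typing import Dict, List

def p95(samples: List[float]) -> float:
    """95th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.95 * len(ordered))  # 1-based rank
    return ordered[rank - 1]

def unattached_volume(resource: Dict, now: datetime) -> bool:
    return (resource["type"] == "volume"
            and not resource["attached"]
            and now - resource["created_at"] > timedelta(days=7))

def idle_vm(resource: Dict, now: datetime) -> bool:
    # Assumes cpu_samples holds CPU utilization % samples from the last 14 days.
    samples = resource.get("cpu_samples", [])
    return resource["type"] == "vm" and bool(samples) and p95(samples) < 5.0

POLICIES = {"unattached-volume-7d": unattached_volume, "idle-vm-p95-cpu-5pct-14d": idle_vm}

def scan(resources: List[Dict], now: datetime) -> List[Dict]:
    """Evaluate every policy against every resource and estimate monthly savings."""
    findings = []
    for resource in resources:
        for name, rule in POLICIES.items():
            if rule(resource, now):
                findings.append({"resource_id": resource["id"], "policy": name,
                                 # Savings estimate = the resource's amortized monthly cost.
                                 "est_monthly_savings": resource["monthly_amortized_cost"]})
    return findings

now = datetime(2025, 3, 14, tzinfo=timezone.utc)
resources = [
    {"id": "vol-0aaa", "type": "volume", "attached": False,
     "created_at": now - timedelta(days=30), "monthly_amortized_cost": 40.0},
    {"id": "vm-idle", "type": "vm", "cpu_samples": [1.0, 2.0, 3.5] * 112,
     "monthly_amortized_cost": 140.0},
    {"id": "vm-busy", "type": "vm", "cpu_samples": [20.0, 55.0, 70.0] * 112,
     "monthly_amortized_cost": 300.0},
]
findings = scan(resources, now)
```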

You can immediately route actions to Slack, ServiceNow, email, Jira, or backlogs, or snooze with justification — which matters later for audit evidence and FinOps accountability metrics.

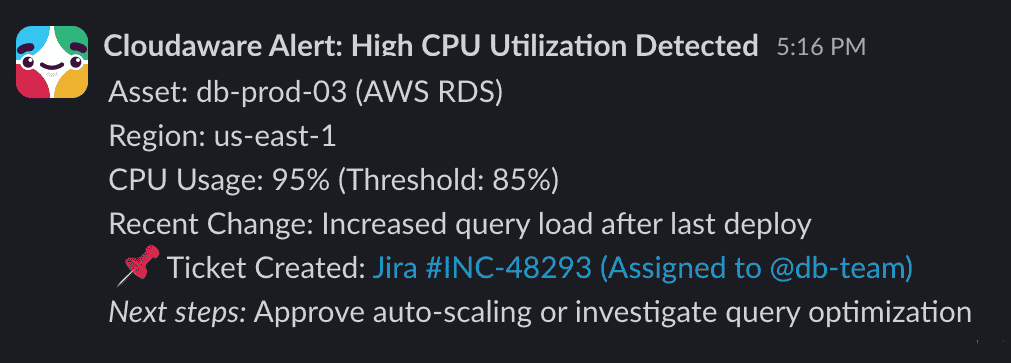

Example of the Slack notification from Cloudaware:

This is also where teams begin to track acceptance rate, savings realized, and time-to-remediation — the FinOps Foundation lists those as core KPIs of effective optimization at the “Walk” maturity level.

And once silent waste is under control, you’re ready for the next step, where we answer the question every CFO eventually asks:

“Are we actually getting value from our RI/SP/EA commitments?”

That’s where effective cost — not just list price — becomes reality.

Read also: 13 Cloud Cost Optimization Best Practices From Field Experts

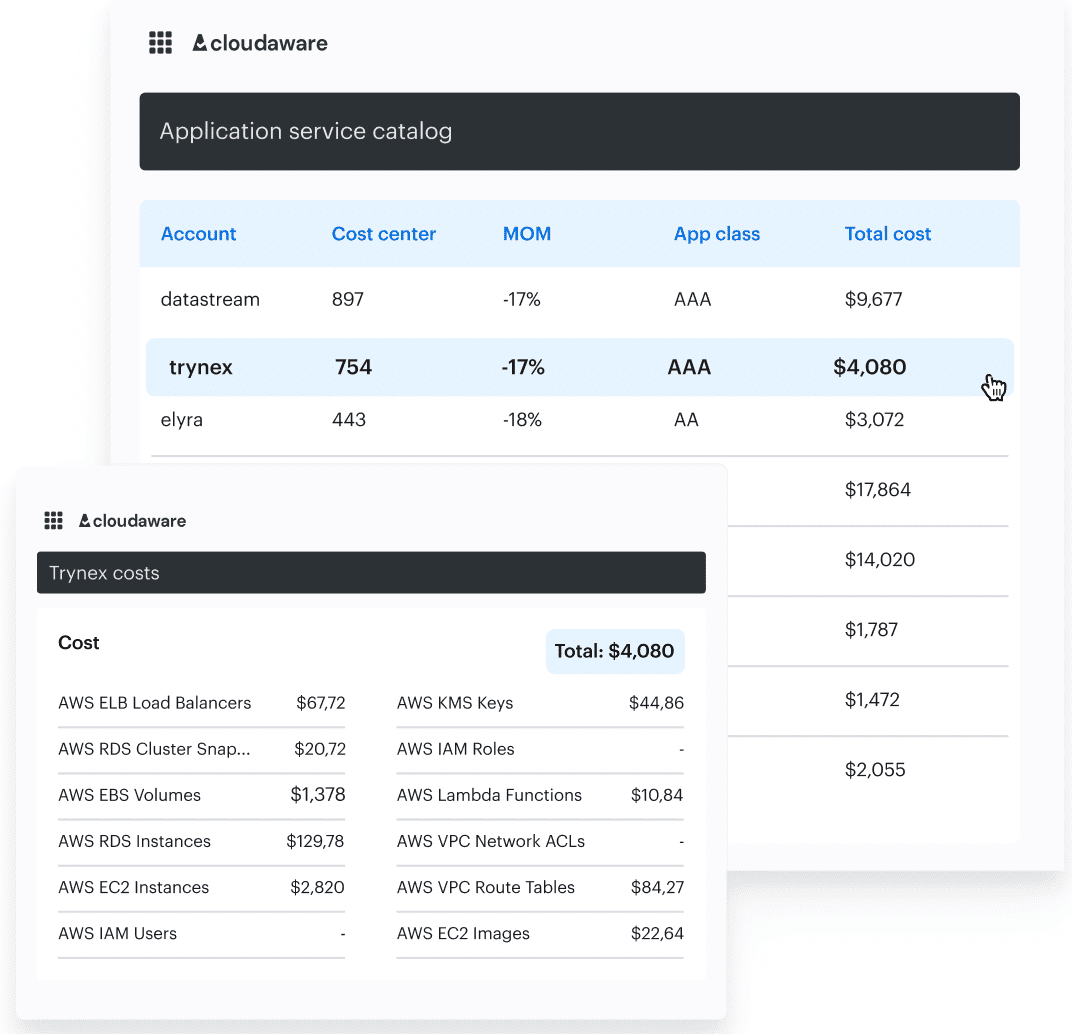

Analyze RI/SP/EA commitments to calculate amortized “effective cost”

This is the step where a lot of teams get caught. Finance thinks you saved $50K. Engineering doesn’t feel it. And AWS still bills you like normal.

That’s because list-price savings don’t matter; only the amortized effective rate does. The FinOps Foundation calls it the “*only number worth defending in an executive meeting.*”

So here’s how to do it right:

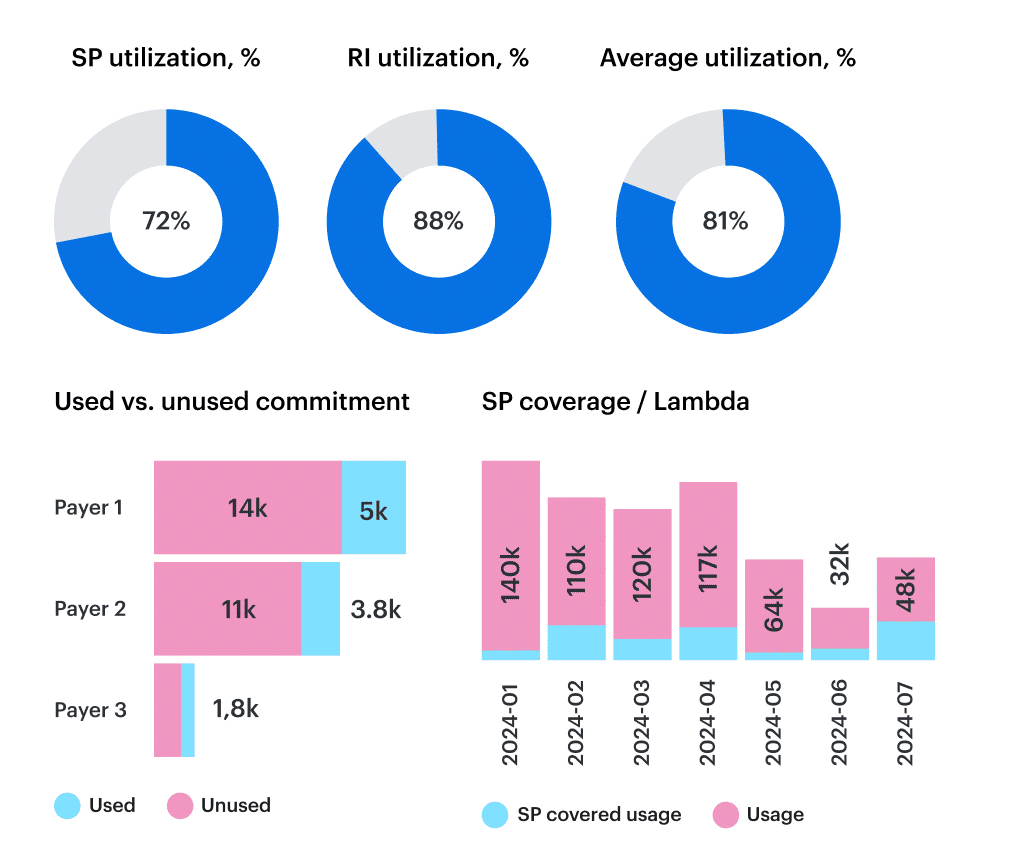

You want three commitment metrics, automatically tracked per Scope — not just globally:

- RI/SP coverage: % of eligible usage that’s covered (per region + instance family).

- Utilization: % of your commitment you’re actually consuming.

- Amortized effective rate: what you truly paid per hour or per unit once upfront fees and discounts are spread across the term.
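Here is a worked example of the three metrics for a single Scope, written as a small Python function. The inputs are hypothetical figures for one billing period: covered vs. on-demand hours, commitment purchased vs. used, and on-demand cost. The effective rate here divides all amortized spend, including the unused part of the commitment, by all eligible usage hours. That is one common way to define it, not necessarily the exact formula any particular tool uses.

```python
from dataclasses import dataclass

@dataclass
class CommitmentMetrics:
    coverage: float        # share of eligible usage hours covered by RI/SP
    utilization: float     # share of the purchased commitment actually consumed
    effective_rate: float  # amortized $ per eligible usage hour

def commitment_metrics(covered_hours: float, on_demand_hours: float,
                       commitment_purchased: float, commitment_used: float,
                       on_demand_cost: float) -> CommitmentMetrics:
    """Compute coverage, utilization, and effective rate for one Scope and period.

    commitment_purchased is the amortized commitment cost for the period; it is
    paid in full whether or not it is used, so unused commitment raises the rate.
    """
    total_hours = covered_hours + on_demand_hours
    return CommitmentMetrics(
        coverage=covered_hours / total_hours,
        utilization=commitment_used / commitment_purchased,
        effective_rate=(commitment_purchased + on_demand_cost) / total_hours,
    )

# Hypothetical Scope: $100 of amortized commitment, $82 of it used,
# 800 covered hours plus 200 on-demand hours at $0.20/hour.
m = commitment_metrics(covered_hours=800, on_demand_hours=200,
                       commitment_purchased=100.0, commitment_used=82.0,
                       on_demand_cost=200 * 0.20)
```

In this example, coverage is 80%, utilization is 82%, and the effective rate is $0.14/hour. The $18 of unused commitment is part of what pushes that rate up, which is why it is worth seeing per team rather than only per account.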

Cloudaware ingests AWS CUR, Azure EA, and GCP CUD data with amortization turned on — and ties it directly to Scopes, so you don’t just know your SP is 82% utilized — you know which team is burning or wasting it.

Element of the cloud spend optimization dashboard in Cloudaware.

You’ll immediately see if:

- AI experiments burst into on‑demand instead of covered instance types.

- Azure EA discounts stopped applying after a region migration.

- GCP CUDs are rotting unused while spot is overused elsewhere.

This is a maturity gate. At Walk/Run level, the FinOps Foundation expects you to evaluate commitment ROI by business unit — not just cloud account.

Commitment ROI also depends on how well you can predict spend, which is where we’re heading next: how to set budgets by Scope, model future spend, and alert early when you’re about to blow past it.

Create forecasts and budgets, then monitor variance with threshold alerts

This is where cloud spend analysis shifts from “explaining the past” to protecting the future.

Forecasting isn’t just a finance exercise. It’s a FinOps control loop. And the FinOps Foundation makes it clear: at Walk maturity, every team with meaningful usage should own a budget, a forecast, and a variance alert.

You don’t need perfect foresight — you need predictable error. If your forecast accuracy swings ±25–30%, your RI/SP decisions are disconnected from reality. And Finance knows it.

Here’s how high-functioning teams do it:

In Cloudaware, forecasting uses 30/60/90-day usage trends, broken down by Scope (tags, labels, CMDB relationships). That lets you model spend at the app, team, environment, or business unit level — across AWS, Azure, and GCP.

FinOps forecast report element.
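The text doesn't spell out Cloudaware's forecasting model. As a stand-in, this sketch fits a plain least-squares linear trend to a trailing window of daily costs (for example, the last 30 days) and projects the rest of the month. It is a deliberately simple baseline: seasonality, commitment changes, and confidence bands are not modeled. `linear_fit` and `forecast_month_end` are hypothetical names.

```python
from typing import List, Tuple

def linear_fit(ys: List[float]) -> Tuple[float, float]:
    """Least-squares fit y = intercept + slope * x for x = 0..n-1."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two data points")
    mean_x = (n - 1) / 2
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(ys))
    var = sum((x - mean_x) ** 2 for x in range(n))
    slope = cov / var
    return mean_y - slope * mean_x, slope

def forecast_month_end(trailing_daily: List[float], mtd_actual: float,
                       days_remaining: int) -> float:
    """Month-to-date actuals plus the trend projected over the remaining days.

    trailing_daily must end with the latest complete day; projections start
    the day after. Negative projected days are clamped to zero.
    """
    intercept, slope = linear_fit(trailing_daily)
    n = len(trailing_daily)
    projected = sum(max(0.0, intercept + slope * (n + i)) for i in range(days_remaining))
    return mtd_actual + projected

# Hypothetical Scope whose daily spend grows by $2/day from $100.
trailing = [100.0 + 2 * i for i in range(30)]
forecast = forecast_month_end(trailing, mtd_actual=1000.0, days_remaining=5)
```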

Budgets are then layered on top with thresholds that trigger action:

- 80%: Quiet flag.

- 90%: Auto-alert to Scope owner via Slack or Jira.

- 100%: Escalation, reforecast, or scope review.
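The threshold ladder above maps neatly onto a small function. This sketch compares actual spend against the budget for the period. You could just as well feed it the month-end forecast to alert earlier. The action labels are placeholders for whatever your Slack or Jira routing does. The sketch also includes a signed forecast-variance helper, applied to the $48K vs. $65K case discussed in this section.

```python
def budget_action(spend: float, budget: float) -> str:
    """Map budget consumption to the 80/90/100% escalation ladder."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    used_pct = spend / budget * 100
    if used_pct >= 100:
        return "escalate"      # escalation, reforecast, or scope review
    if used_pct >= 90:
        return "alert_owner"   # auto-alert to the Scope owner (Slack/Jira)
    if used_pct >= 80:
        return "quiet_flag"    # recorded, no notification
    return "ok"

def forecast_variance_pct(forecast: float, actual: float) -> float:
    """Signed variance of actual spend vs. forecast, as a % of the forecast."""
    return (actual - forecast) / forecast * 100

# A $48K forecast that ends at $65K: roughly +35.4%, far outside a ±15% band.
variance = forecast_variance_pct(48_000, 65_000)
```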

You’ll track variance alerts, budget breach frequency, and even MTTV (mean time to variance detection). These are the KPIs J.R. Storment says define real FinOps maturity, because you’re not guessing; you’re adjusting in real time.

This also feeds right into capacity planning, hiring timelines, and commit adjustments. If a team forecasts $48K and ends up at $65K, it’s either scale, sprawl, or tagging drift.

And the quiet culprit in a lot of budget overages? Kubernetes.

Map Kubernetes requests/limits to spend and rightsize without breaking SLOs

Kubernetes is the black box of cloud cost analysis. You don’t just see “EC2 spend” or “GKE usage” — you see clusters, nodes, pods, and namespaces… without dollar signs. And when requests and limits aren’t tuned? You’re either overspending or missing your SLOs.

The fix starts with visibility.

Cloudaware maps K8s requests/limits to real cost data across AWS, Azure, and GCP. You can slice usage by namespace, cluster, or deployment, and tie that back to actual cloud spend — not theoretical “CPU hours.” It’s full namespace showback, scoped the same way you handle your other cost analysis: by team, app, or business unit.

From there, it’s all about finding over-request waste. The platform looks at p95 usage over a rolling window — say, 14 or 30 days — and flags workloads requesting 2–3x what they actually need.

You’ll see things like:

- `payments-prod` deployment requesting 4 vCPU, using 1.2 at p95.

- `ml-training-staging` pods reserving 16 GB RAM, averaging 4 GB.

- SLO coverage still green, even after cut recommendations.

Every recommendation includes the savings estimate, risk rating (based on p95/latency thresholds), and target configuration to rightsize cleanly.
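The over-request check can be sketched as a ratio test between requested capacity and p95 usage, with a target that keeps some headroom above p95. The 2x flag ratio matches the "2–3x" pattern mentioned above. The 20% headroom, the 0.1 rounding step, and the per-unit monthly prices are assumptions. The SLO/latency risk rating is not modeled, and zero-usage workloads are left to the idle-resource policies.

```python
import math
from dataclasses import dataclass
from typing import Optional

@dataclass
class Rightsizing:
    workload: str
    requested: float
    target: float
    monthly_savings: float

def recommend(workload: str, requested: float, p95_usage: float,
              monthly_cost_per_unit: float, flag_ratio: float = 2.0,
              headroom: float = 1.2, step: float = 0.1) -> Optional[Rightsizing]:
    """Suggest a lower request when requested >= flag_ratio x p95 usage.

    Units are whatever the request uses (vCPU or GB); the target is p95 plus
    headroom, rounded up to the nearest `step`.
    """
    if p95_usage <= 0 or requested / p95_usage < flag_ratio:
        return None
    # round() guards against float noise (e.g. 14.000000000000002) before ceil.
    target = math.ceil(round(p95_usage * headroom / step, 6)) * step
    target = round(min(target, requested), 6)
    return Rightsizing(workload, requested, target,
                       round((requested - target) * monthly_cost_per_unit, 2))

# Hypothetical $/unit-month prices; workloads mirror the examples above.
cpu = recommend("payments-prod", requested=4.0, p95_usage=1.2, monthly_cost_per_unit=20.0)
mem = recommend("ml-training-staging", requested=16.0, p95_usage=4.0, monthly_cost_per_unit=3.0)
```

For `payments-prod`, this suggests dropping the request from 4 vCPU to 1.5 vCPU (1.2 × 1.2 = 1.44, rounded up).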

Read also: What Is Rightsizing in Cloud Cost Optimization? Pro Insights

This gives platform teams something they rarely have in Kubernetes: confidence. Confidence to scale down requests without breaking SLAs. Confidence to commit capacity. Confidence to make cost and performance part of the same conversation.

And the best part? Once your clusters are sized right, you don’t need to explain the same numbers over and over. You can put that data where it belongs — in a scheduled report 👇

Automate reporting: scheduled finance/engineering summaries

This is the point where FinOps stops being reactive.

By Walk maturity, you shouldn’t be pulling reports on demand — you should be delivering scheduled, role-specific summaries that stakeholders expect and actually act on.¹

Because the pattern is always the same: The spend spike gets noticed in the MBR, not before. Finance wants a one-slide answer by 4pm. Engineering wasn’t even looped in. So we fix that here — by automating cost reporting into the rhythms people already run on.

In Cloudaware, that means you generate recurring, scoped reports like:

For Finance (monthly or MBR-ready):

- Executive summary of top Scope variances.

- Effective cost per cloud and per business unit.

- Forecast vs budget variance (with confidence band).

- RI/SP/EA utilization rollup.

For Engineering / product owners (weekly):

- Spend by environment (prod/dev/test)

- Anomalies flagged with owner routing metadata

- Rightsizing: accepted vs ignored vs snoozed

- Kubernetes over-request findings + risk level

Delivered automatically — via email, Slack, or directly embedded into MBR/QBR decks. No manual exporting. No guessing what view someone else filtered by.

This is where trust is built — and execs stop asking “can I get the numbers?” and start asking “should we change the plan?”

Because that’s the real point.

Next, we tighten the loop — so cost awareness isn’t a report, it’s a guardrail.

Read also: 10 Cloud Cost Optimization Benefits & Why It’s a Must In 2026

Enforce governance: policy-as-code checks to prevent cost regressions

Here’s the dirty secret of cloud spend analysis: even when you optimize today, the waste creeps back in by next sprint.

The FinOps Foundation calls this the “regression loop”, and it’s exactly why governance is a Walk-level requirement in the FinOps maturity model.

Because what’s the point of cleaning up $12K in idle RDS if someone spins up an untagged db.r5.12xlarge tomorrow — and no one sees it until the bill lands?

This is where policy-as-code becomes your safety net.

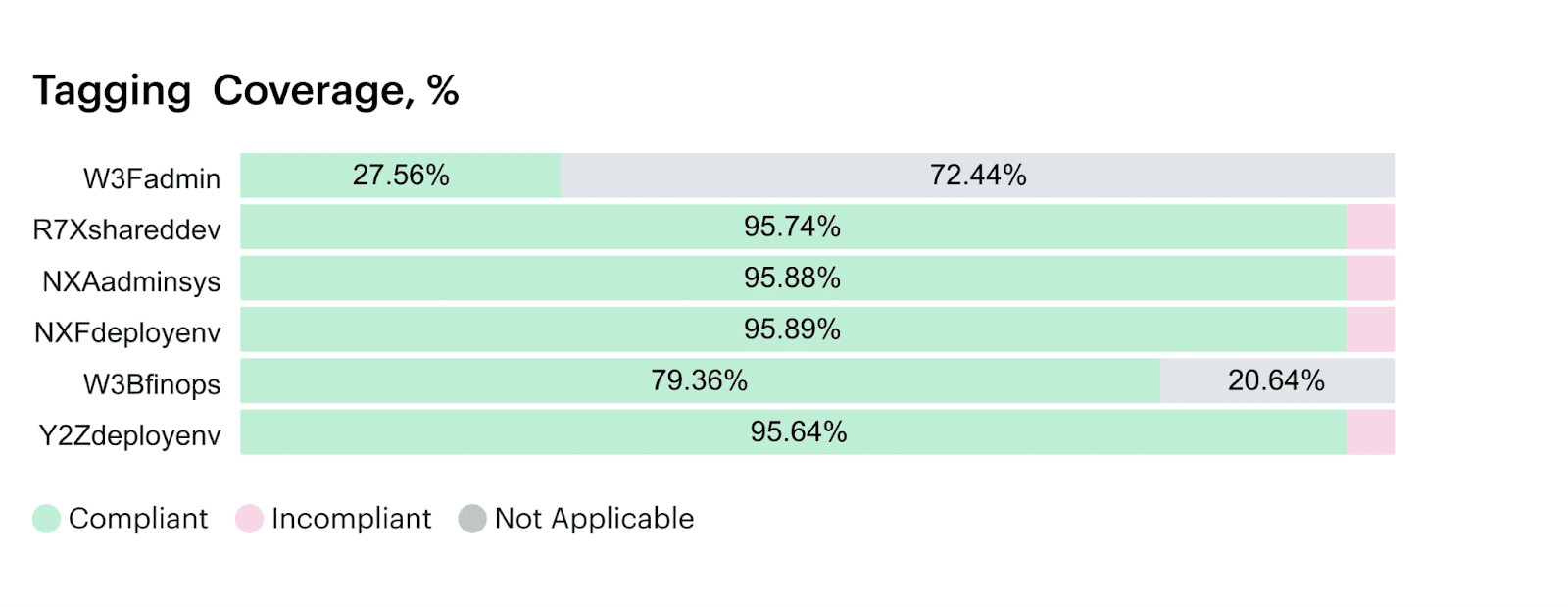

In Cloudaware, policies are versioned records stored right in the CMDB. You set thresholds — like only t3.medium allowed in dev, require owner_email__c, or tag compliance > 95% — and hook them into your workflow.

That means:

- CI checks block cost regressions before merge

- Slack alerts flag policy violations in real time

- Jira tickets get auto-created for ignored findings

- Auto-remediation kicks in when usage drifts from policy
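To make the "CI checks block cost regressions" idea concrete, here is a minimal gate. It evaluates planned resources against the three example rules above and returns a non-zero exit code on any violation:

- Only `t3.medium` is allowed in dev.
- `owner_email__c` is required.
- Tag compliance must be above 95%.

The input format is a hypothetical simplification. In a real pipeline you would build it from your IaC plan output, for example Terraform's JSON plan. Cloudaware's own policies live in its CMDB rather than in a script like this.

```python
from typing import Dict, List

ALLOWED_DEV_INSTANCE_TYPES = {"t3.medium"}
MIN_TAG_COMPLIANCE_PCT = 95.0

def violations(resource: Dict) -> List[str]:
    """Return human-readable policy violations for one planned resource."""
    found = []
    tags = resource.get("tags", {})
    instance_type = resource.get("instance_type")
    if tags.get("environment__c") == "dev" and instance_type not in ALLOWED_DEV_INSTANCE_TYPES:
        found.append(f"{resource['id']}: {instance_type} not allowed in dev")
    if not tags.get("owner_email__c"):
        found.append(f"{resource['id']}: missing owner_email__c")
    return found

def tag_compliance_pct(resources: List[Dict]) -> float:
    """Share of resources carrying an owner_email__c tag."""
    if not resources:
        return 100.0
    tagged = sum(1 for r in resources if r.get("tags", {}).get("owner_email__c"))
    return tagged / len(resources) * 100

def ci_gate(resources: List[Dict]) -> int:
    """Print violations and return a process exit code (0 = pass, 1 = block merge)."""
    problems = [v for r in resources for v in violations(r)]
    compliance = tag_compliance_pct(resources)
    if compliance < MIN_TAG_COMPLIANCE_PCT:
        problems.append(f"tag compliance {compliance:.1f}% < {MIN_TAG_COMPLIANCE_PCT}%")
    for problem in problems:
        print(f"POLICY VIOLATION {problem}")
    return 1 if problems else 0

planned = [
    {"id": "aws_instance.api", "instance_type": "t3.medium",
     "tags": {"environment__c": "dev", "owner_email__c": "api-team@acme.com"}},
    {"id": "aws_db_instance.reports", "instance_type": "db.r5.12xlarge",
     "tags": {"environment__c": "dev"}},
]
exit_code = ci_gate(planned)  # in CI: sys.exit(ci_gate(planned))
```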

Each policy action is tied to a Scope, so teams see the exact rule, impact, and owner without context-switching. You can even report on policy violations over time, which gives you KPIs like:

- Cost delta from rejected merges.

- Forecast variance due to missed policies.

- % of tagged resources per cloud

Example of the dashboard with tagging coverage within Cloudaware.

Mike Fuller called this out at FinOps X: “Governance isn't the audit log. It's the runtime.”

This step moves FinOps from detective work to proactive enforcement — cost guardrails that live where engineers already work.

And once you’re here? Picking the right tooling is about more than feature checklists. You need platforms that support this level of control, context, and accountability out of the box.

Read also: 12 FinOps use cases + solutions for multi-cloud spend

How to choose the best cloud cost analysis tools to make it real?

So you've cleaned your data, scoped your spend, enforced your policies — and now you're wondering: “What tools actually support all this without duct tape?”

The truth is, most cloud cost analysis tools will give you a dashboard. A few will track daily deltas. But very few can handle the real FinOps lifecycle across multi-cloud, multi-team setups where budgets, RI/SPs, anomaly routing, and showback all need to work together.

Here’s what to look for — based on what actually breaks at scale:

Must-have criteria for modern FinOps tools:

- CMDB context — Link cost data to business ownership even when tags are missing. Should support tag enrichment, inferred ownership, and relationship mapping across services.

- Cross-cloud analytics — Full support for AWS CUR, Azure EA, and GCP BigQuery with amortized & blended cost columns.

- Scoped filtering — Slice spend by team, app, environment using tags, labels, or CMDB-backed Scopes — not just account IDs.

- Commitments view — Show RI/SP/EA coverage, utilization, and amortized effective rate per Scope and service.

- Daily anomaly routing — Alert owners in Slack, Jira, or ticketing tools with context-rich cost data and root-cause hints.

- Live dashboards — Support top-down and bottom-up views, scoped by stakeholder, updated daily, and exportable for execs.

- Forecasting + budgets — Create usage-based forecasts and monitor variance with real-time alerts per Scope.

- Kubernetes spend mapping — Track requests vs. p95 usage per namespace, deployment, or pod with $ cost overlay.

- Policy-as-code integration — Enforce guardrails like tag policies, budget gates, and approved instance types at deploy time.

- Scheduled MBR-ready reports — Auto-deliver stakeholder-specific summaries to Slack, email, or exec decks — no pivoting required.

- Audit-friendly history — Track who changed what, when, and why — for every cost policy, threshold, or tag fix.

So if you’re evaluating cloud cost analysis tools right now, don’t stop at “does it have a dashboard?” or “can it pull AWS CUR?” Ask: Can it make this real across engineering, finance, and product — without custom scripts or twelve browser tabs?

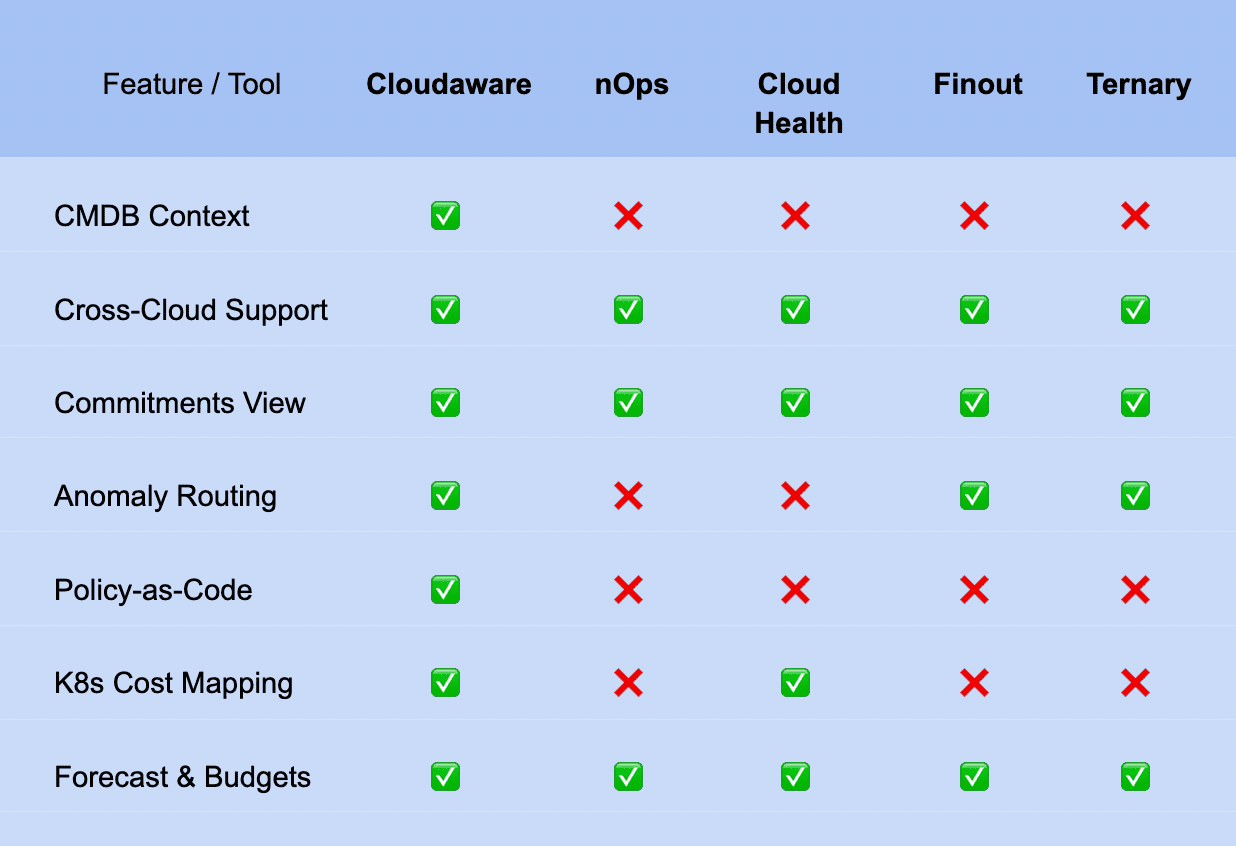

Here’s how five of the most talked-about tools stack up when you hold them to the criteria that actually matter in day-to-day FinOps work:

5 cloud cost analysis tools compared

If you’re looking at this table and thinking, “Okay… but how does this fit my org?” — that’s the right question.

Tool checklists are helpful. But real FinOps success depends on how those cost optimization tools work inside your team structure, your budgets, your usage patterns, and the blockers you’re already dealing with.